Questions & Answers

Azure Cognitive Services - Questions & Answers

As you know, here at AppGenie, we’re a multi-cloud app dev practice. While we love working with Salesforce (obviously!), we’re all about diving into other technologies as well, and today we’re turning our attention to Azure Cognitive Service for Language and its Question Answering capability (the successor to QnA Maker; LUIS, or Language Understanding, is a separate service whose successor is Conversational Language Understanding).

So, what are Language Services, Question Answering and LUIS?

In short, they’re ways of teaching machines to understand human language. But it’s not just about tossing text into an algorithm and calling it a day. Question Answering takes input, like text (or voice commands once they’ve been transcribed to text), and breaks it down into something the system can work with. Across the Language service, features identify things like user intent, key entities (names, dates, locations, etc.), and context, making interactions with machines feel a whole lot more human. And this matters because, let’s face it, nobody wants to chat with a bot that doesn’t get what they’re saying.

From here on out – Question Answering from Azure Cognitive Service for Language is going to get shortened significantly to ACLQA!

Let’s break down how ACLQA makes that happen and why it could be a game changer for your next app or bot project. Our example is deliberately simple: we’re adding the ability to find a song title from some search parameters using ACLQA. To do this, we’ll set up a webpage where users can type in partial song lyrics, an album name, or an artist name. We’ll then process this input, identify the user’s intent (like finding a song or an artist), and call the Genius API to retrieve the matching song title and lyrics.

To keep this example short, I’ll focus on the first part of the problem and leave the actual coding solution for another post. So let’s focus on setting up Azure Question Answering.

Setting Up Azure Question Answering

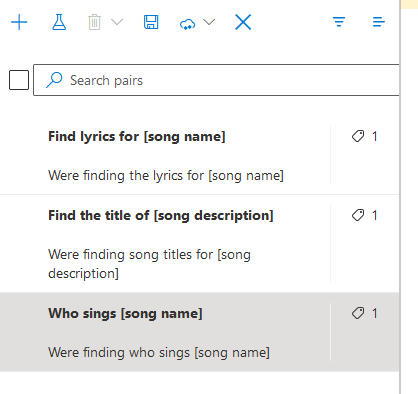

The Question Answering service allows you to upload documents or provide content that the AI can refer to in order to answer user questions. We’ll use this service to enable the AI to understand and respond to queries like:

- “What are the lyrics to [song title]?”
- “Who sings [song name]?”
- “Give me the lyrics for the song [partial lyric]”

Let’s get started with the step-by-step.

-

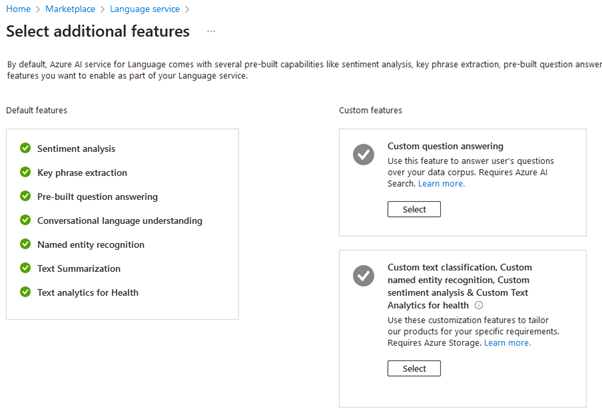

Create an Azure Account and Set Up Question Answering:

Head to the Azure Portal, and create an Azure Cognitive Services resource.

-

Select Custom Question Answering as the service:

-

Continue through the Wizard:

Follow your nose for the rest of the selections – if youre just starting out use the Free Tier and 3 Indexes as this will get you up and running at a minimal cost. -

On Completion:

On completion of the deployment go to your new “Language” service, | Overview as you will need the Keys and the endpoint.

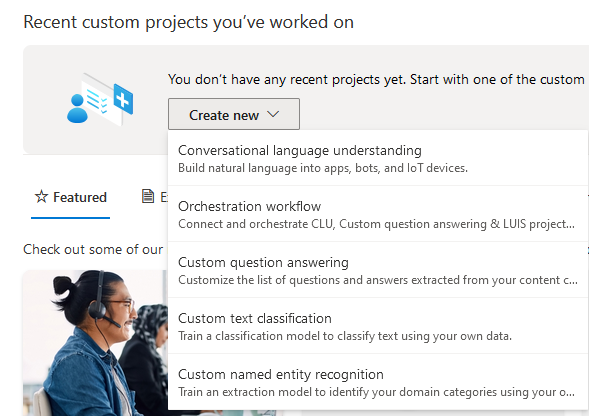

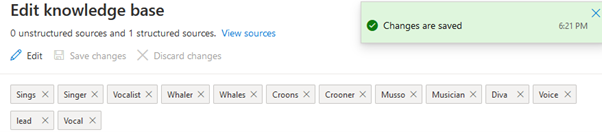
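With the key and endpoint from the Overview page, a client can call the deployed project over REST. The sketch below only builds the request and does not send it. It rests on several assumptions you should check against the current Azure documentation: the `/language/:query-knowledgebases` route, the `2021-10-01` API version, the `production` deployment name and the `Ocp-Apim-Subscription-Key` header. The function name and the example project name are hypothetical.

```python
import json
import urllib.parse
import urllib.request

# Assumed API version for the Question Answering query route; verify in the Azure docs.
API_VERSION = "2021-10-01"


def build_query_request(endpoint: str, key: str, project: str, question: str,
                        deployment: str = "production") -> urllib.request.Request:
    """Hypothetical helper: build (but do not send) a POST asking a deployed project a question."""
    params = urllib.parse.urlencode({
        "projectName": project,
        "deploymentName": deployment,
        "api-version": API_VERSION,
    })
    url = f"{endpoint.rstrip('/')}/language/:query-knowledgebases?{params}"
    body = json.dumps({"question": question}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            # The key copied from Keys and Endpoint on the resource's Overview page
            "Ocp-Apim-Subscription-Key": key,
            "Content-Type": "application/json",
        },
    )


req = build_query_request("https://<your-resource>.cognitiveservices.azure.com/",
                          "<your-key>", "song-finder", "Who sings Beers?")
print(req.get_method(), req.full_url)
```

Sending it would just be `urllib.request.urlopen(req)`. In a real app, keep the key in configuration or a secret store rather than in code.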

To use Azure Question Answering, you need to provide it with relevant information, which for us is our questions and answers. So go to the “Language” service you just created and open “Language Studio”.


Language Studio is a standalone application that lets you create your Language content. At this point it’s an emerging product, and it feels less like a unified experience and more like an add-on. So if you want to avoid the mire of helpful marketing, just open this link: https://language.cognitive.azure.com/home

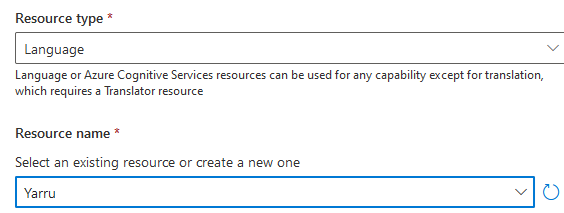

The first thing you have to do is connect your new Cognitive Service. Fortunately, the in-app guidance prompts you to do this. For those who never read it or can’t manage the four pages: under your account information (top right corner), there’s an option to Select a resource.

Then enter your basic information (Name, Description and a No answer prompt). Just use the defaults; you can always change these later.

At this stage you’re prompted to start adding your knowledge base from data sources. As with the data sources in the “Search service”, this is all about analysing large volumes of data, and we actually don’t want this in our example.

How does ACLQA Work?

We’re only using a subset of the capabilities here, so how is ACLQA supposed to work? How does it generate automated answers while staying flexible about the different ways a question can be asked?

- Ask a question: You send a question to the Question Answering API, say, “What are the business hours?”
- Knowledge lookup: The service searches your knowledge base, a collection of question-answer (Q&A) pairs that you’ve already set up. You’ve likely uploaded FAQs or documents containing relevant info.
- Question match: Using AI-driven natural language processing, it matches your question with a response.
- Find the matching response: ACLQA finds the most relevant answer in your Q&A pairs, even if your question is worded differently from the original. For example, you ask “When are you open?” but the knowledge base answer is for “business hours”.
- Return the response: It returns the best-matching response, “We’re open from 9 AM to 5 PM on weekdays.”
- Optionally: You can set a confidence score threshold so that responses are only returned when the service is confident enough. You can also customise Q&A pairs to improve accuracy.
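The steps above can be sketched on the client side as follows. This is a minimal sketch under some assumptions: the query body accepts `question`, `top` and `confidenceScoreThreshold`, and the JSON response carries an `answers` list whose items have `answer` and `confidenceScore` fields. The function names and the sample response are hypothetical.

```python
import json
from typing import Optional


def build_query_body(question: str, top: int = 3, threshold: float = 0.5) -> bytes:
    """Hypothetical helper: JSON body asking for up to `top` answers."""
    return json.dumps({
        "question": question,
        "top": top,
        # Assumed field name: answers scoring below this are dropped by the service
        "confidenceScoreThreshold": threshold,
    }).encode("utf-8")


def best_answer(response_body: bytes, min_confidence: float = 0.5) -> Optional[str]:
    """Return the highest-scoring answer at or above min_confidence, else None."""
    answers = json.loads(response_body).get("answers", [])
    confident = [a for a in answers if a.get("confidenceScore", 0.0) >= min_confidence]
    if not confident:
        return None
    return max(confident, key=lambda a: a["confidenceScore"])["answer"]


# Hypothetical response for "When are you open?"
sample = json.dumps({
    "answers": [
        {"answer": "We're open from 9 AM to 5 PM on weekdays.", "confidenceScore": 0.87, "id": 1},
        {"answer": "Returns are accepted within 30 days.", "confidenceScore": 0.31, "id": 2},
    ]
}).encode("utf-8")

print(best_answer(sample))       # We're open from 9 AM to 5 PM on weekdays.
print(best_answer(sample, 0.9))  # None
```

Checking the score again on the client is belt and braces. The service-side threshold already drops weak matches, but a local check lets the app decide what to show when nothing clears the bar.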

Now compare that with our song example:

- Ask a question: You send a question to the Question Answering API, say, “What are the lyrics for that Beers song from that band from NZ?”
- Question match: The AI interprets the question and tries to match it to a Q&A pair. Here there is no matching content, so it will only return the default (No answer) response.
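One way the app could detect that case is sketched below. It assumes two things: the response from the query route carries an `answers` list, and the service returns the No answer prompt you set when creating the project. `NO_ANSWER_TEXT` is a hypothetical placeholder, so replace it with whatever text you actually entered.

```python
import json

# Hypothetical placeholder: use the exact "No answer" prompt you entered in Language Studio.
NO_ANSWER_TEXT = "No answer found"


def is_no_answer(response_body: bytes, no_answer_text: str = NO_ANSWER_TEXT) -> bool:
    """True when only the default answer (or nothing at all) came back."""
    answers = json.loads(response_body).get("answers", [])
    # all() over an empty list is True, so an empty answers list also counts as "no answer"
    return all(a.get("answer", "").strip() == no_answer_text for a in answers)


beers = json.dumps({"answers": [{"answer": "No answer found", "confidenceScore": 0.0}]}).encode("utf-8")
print(is_no_answer(beers))  # True
```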

Adding Questions

Let’s add our questions and test the responses, starting with lyrics.
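If you’d rather script Q&A pairs than type them into Language Studio, a pair with alternate phrasings might look like the sketch below. The `op`/`value` shape reflects our understanding of what the authoring API’s update-QnAs operation accepts, so treat it as an assumption and check the authoring reference. `qna_add_op` and its `source` default are hypothetical. The example reuses the business-hours pair from earlier.

```python
import json
from typing import Dict, List


def qna_add_op(qna_id: int, answer: str, questions: List[str], source: str = "editorial") -> Dict:
    """Hypothetical helper: one 'add' operation for a Q&A pair with alternate phrasings."""
    return {
        "op": "add",
        "value": {
            "id": qna_id,
            "answer": answer,
            "source": source,        # hypothetical label for hand-written pairs
            "questions": questions,  # the main question plus its alternate phrasings
            "metadata": {},
        },
    }


ops = [
    qna_add_op(1, "We're open from 9 AM to 5 PM on weekdays.",
               ["What are the business hours?", "When are you open?"]),
]
print(json.dumps(ops, indent=2))
```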

Let’s see what adding synonyms does to our success rate. To do this, click Synonyms.
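Synonyms tell the service that several terms are interchangeable. Below is a minimal sketch of building a synonyms payload. It assumes the authoring API takes a `value` list of `{"alterations": [...]}` groups, which you should verify against the authoring reference. `synonyms_payload` is a hypothetical helper that also drops blanks, duplicates and single-term groups.

```python
import json
from typing import List


def synonyms_payload(groups: List[List[str]]) -> str:
    """Hypothetical helper: build a synonyms body from groups of interchangeable terms."""
    value = []
    for group in groups:
        terms: List[str] = []
        for term in group:
            term = term.strip()
            if term and term not in terms:  # drop blanks and exact duplicates
                terms.append(term)
        if len(terms) >= 2:                 # a lone term has nothing to be swapped with
            value.append({"alterations": terms})
    return json.dumps({"value": value})


print(synonyms_payload([["lyrics", "words", "lyrics"], ["song"]]))
# {"value": [{"alterations": ["lyrics", "words"]}]}
```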

Limitations?

Okay, so now the bad news: although it’s built on machine learning models, ACLQA does not, out of the box, learn from whether its answers were correct. However, there are ways to improve and fine-tune the system over time. Here’s how you can manage and enhance its accuracy:

- Manual training: ACLQA requires some manual tuning. You can use the feedback and analytics tools in the Azure portal to see which questions received inaccurate or low-confidence answers. Based on this, you can refine your Q&A pairs or add variations to existing questions that better capture the phrasings users commonly use.
- Alternate questions and variations: If you see a recurring issue where users phrase questions differently, you can add these variations directly to each Q&A pair in your knowledge base. This helps the service understand different phrasings of the same intent.
- Active Learning (semi-automated improvement): Azure does offer an “Active Learning” feature. It doesn’t directly learn from correct answers. Instead, it collects usage patterns and highlights questions that often receive low-confidence responses or that users mark as incorrect. It then suggests modifications, such as adding alternative phrasings or clarifying ambiguous Q&A pairs.
- Regular retraining: Periodically retraining and updating your Q&A pairs helps. The service doesn’t learn on its own, but updates based on the insights you gather (like common low-confidence questions) can make it more accurate over time.
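The manual side of this loop can be as simple as counting which questions keep scoring poorly. The sketch below assumes you keep your own log of each question and the confidence score your app received; the log itself is hypothetical. It is a review aid, not Azure’s Active Learning feature.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def low_confidence_report(log: Iterable[Tuple[str, float]],
                          threshold: float = 0.5,
                          top_n: int = 10) -> List[Tuple[str, int]]:
    """Count questions whose confidence fell below threshold, most frequent first."""
    counts = Counter(
        question.strip().lower()  # normalise so trivial case differences group together
        for question, score in log
        if score < threshold
    )
    return counts.most_common(top_n)


# Hypothetical log entries: (question asked, confidence score received)
log = [
    ("Who sings Beers?", 0.20),
    ("who sings beers?", 0.12),
    ("What are the business hours?", 0.91),
    ("Lyrics for Beers", 0.35),
]
print(low_confidence_report(log))
# [('who sings beers?', 2), ('lyrics for beers', 1)]
```

The top entries are natural candidates for new alternate questions or synonyms.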

Summary

The ACLQA approach gives us question-and-answer matching.

However, unlike LUIS (whose successor is Conversational Language Understanding, not Question Answering), it does not identify intent.

This is a serious problem for our example. With the current approach, significant text manipulation is required to work out what the user is actually asking for.
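To show what that text manipulation looks like, here is a minimal sketch that uses regular expressions to pull an intent and a search phrase out of the example question forms. The patterns and intent names are hypothetical and sit entirely outside ACLQA. Notice that the Beers question yields only a vague phrase, which is exactly the problem.

```python
import re
from typing import Optional, Tuple

# Hypothetical patterns mirroring the example questions; not part of ACLQA.
PATTERNS = [
    ("lyrics_by_title", re.compile(r"^what are the lyrics (?:to|for) (?P<slot>.+?)\??$", re.IGNORECASE)),
    ("artist_by_song", re.compile(r"^who sings (?P<slot>.+?)\??$", re.IGNORECASE)),
    ("song_by_lyric", re.compile(r"^give me the lyrics for the song (?P<slot>.+?)\??$", re.IGNORECASE)),
]


def extract_intent(question: str) -> Optional[Tuple[str, str]]:
    """Return (intent, search phrase) for the first matching pattern, else None."""
    text = question.strip()
    for intent, pattern in PATTERNS:
        match = pattern.match(text)
        if match:
            return intent, match.group("slot").strip()
    return None


print(extract_intent("Who sings Beers?"))
# ('artist_by_song', 'Beers')
print(extract_intent("What are the lyrics for that Beers song from that band from NZ?"))
# ('lyrics_by_title', 'that Beers song from that band from NZ')
```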

If you’re after intent resolution, there are easier ways to do this; I’ll save that for next month’s post.

And if you’re interested in the answer, it’s Beers by Deja Voodoo: https://www.youtube.com/watch?v=FfNIOlX-K0s

As always thanks for reading and reach out if you need any help.