Git Source Pipeline

Okay, this is (hopefully) the last article in my series on git. Next month we'll be focusing on how to make Copado cope with the totally bizarre branching mechanism you've put together with everything you've learnt about git! For a refresher, head over to "love it or hate it" to start your git journey, then follow up with "how scary can it get".

If you're still here, then it's time to string some git commands together to actually do something interesting. I was tempted to say exciting, but I'm resisting my inner geek.

What do we want to do, and why?

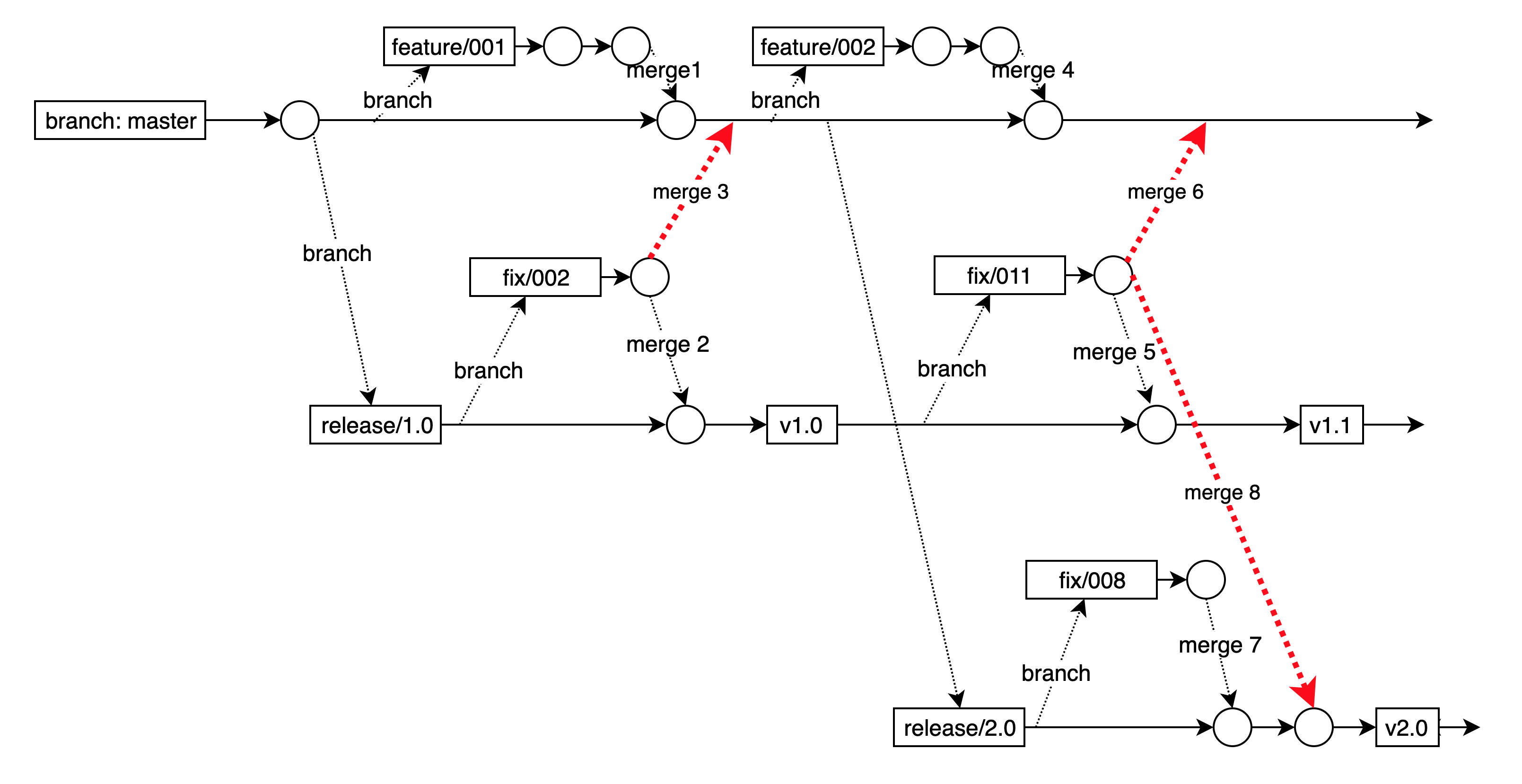

So what we are trying to achieve is to synchronize a number of development streams into a single git repo; this isn't your usual DevSecOps pipeline! So why would we want to do this? I'm glad you asked that question... and I'm going to give you a simple use case to illustrate.

FTP2SF

I'm proud of FTP2SF, AppGenie's proprietary Salesforce file transfer App. It's a fantastically powerful and easy-to-use integration tool that allows a Salesforce developer or user to access data on FTP, SFTP, FTPS, Azure Blob or AWS S3. In what spare time we have, we're adding Dropbox, Box and Microsoft Graph for OneDrive and SharePoint Online in the next release. It's the most cost-effective integration platform on the AppExchange and leverages our love of AWS Lambda and Salesforce.

#SalesPlugOff

When we perform a release of FTP2SF, we release everything: multiple Lambda functions across multiple AWS Regions, the Salesforce managed package build and our support website. To make sure it all works, we execute a bunch of automated tests that start EC2 instances across regions and services, then upload, download, append, delete and rename files against them, verifying the requests and responses to make sure it's all tickety-boo.

None of these live in the same git repo, so when we want to drop a test build we could do it all manually, but we love DevSecOps, so... we script it. We start with a target repo where we can coordinate everything and keep a copy of each release available in case we need to hotfix.

Into the Action

Clone the Repos

Firstly, let's grab the set of repos that we need, including our production release repo. We start by cloning everything we need into the current directory of our Azure DevOps container. I haven't dumped the YAML for this as I want this article to be about git, but yes, we wrap this into an Azure DevOps pipeline that we currently fire manually.

git clone https://.../FTP2SF/_git/Build

git clone https://.../FTP2SF/_git/Website

git clone https://.../FTP2SF/_git/Salesforce

git clone https://.../FTP2SF/_git/Services

git clone https://.../FTP2SF/_git/Samples
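If you'd rather not repeat the clone line for every repo, the same step can be written as a loop that stops at the first failure. This is a minimal sketch, not our actual pipeline script: clone_repos is a hypothetical helper, and because the base URL is elided above, the caller has to pass in their own project's git URL.

```shell
# Minimal sketch: clone (or refresh) every repo the release needs, stopping at the first failure.
# clone_repos is a hypothetical helper; the caller supplies the base URL, which stands in for
# the elided "https://.../FTP2SF/_git" prefix in the commands above.

# The body runs in a subshell "( ... )" so that set -eu does not leak into the caller's shell.
clone_repos() (
  set -eu
  base_url="$1"
  shift
  for repo in "$@"; do
    if [ -d "$repo/.git" ]; then
      # Already cloned (for example, a re-run on the same build agent): fast-forward instead.
      git -C "$repo" pull --ff-only --quiet
    else
      git clone --quiet "$base_url/$repo" "$repo"
    fi
  done
)

# Usage, with BASE_URL set to your own project's git URL (hypothetical variable):
# clone_repos "$BASE_URL" Build Website Salesforce Services Samples
```

Running the loop in a subshell keeps `set -eu` scoped to the clone step, so one failed clone fails the step without changing how the rest of the script behaves.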

Create a Feature branch

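Now that we have the latest version of master for each of the repos, we create a feature branch in our Build repo so that we have a place to do all this stuff.

We name the branch after the current date and time. The -C Build option runs the command inside the Build repo while our shell stays in the parent directory next to the other clones, which is where the copy step below expects to be.

git -C Build checkout -b "feature/$(date +%d%b%y-%H%M%S)"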
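The branch name is just a formatted timestamp, so it is worth pinning down exactly what it produces. A small sketch, assuming a hypothetical helper called make_branch_name: it fixes the locale so %b always gives an English month abbreviation, and asks git to validate the resulting ref name before we use it.

```shell
# Sketch: build the timestamped feature-branch name used above and check that git accepts it.
# make_branch_name is a hypothetical helper, not part of git.
make_branch_name() {
  local name
  # LC_ALL=C pins %b to English month abbreviations (e.g. "Mar") whatever the agent's locale is.
  name="feature/$(LC_ALL=C date +%d%b%y-%H%M%S)"
  # check-ref-format exits non-zero if refs/heads/<name> is not a valid branch ref.
  git check-ref-format "refs/heads/$name" || return 1
  printf '%s\n' "$name"
}

# Usage: git -C Build checkout -b "$(make_branch_name)"
```

One design note: day-month-year names don't sort chronologically (01Apr24 lists before 31Mar24), so if you'd like `git branch --list 'feature/*'` to come out in date order, a format such as +%Y%m%d-%H%M%S does.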

Copy the code

We then need to copy the code from each source repo into this feature branch, compile it where necessary, and commit. For the Website repo, the copy looks like this:

rsync -av --exclude='.*' Website/ Build/Website

This command copies all the content of the Website directory into the Build/Website directory, excluding any hidden files and directories (which includes the source repo's own .git folder).

Here's what each argument means:

- `-a`: Archive mode. Copies recursively and preserves symbolic links, permissions, timestamps, group and owner (preserving the owner only works when rsync runs as root).
- `-v`: Verbose mode. Prints the name of each file as it is copied.
- `--exclude='.*'`: Excludes any file or directory whose name begins with a dot (hidden files and directories) at any depth, so .git, .gitignore and similar files are not copied.
- `Website/`: Specifies the source directory. The trailing slash is important: it tells rsync to copy the contents of the Website directory rather than the Website directory itself.
- `Build/Website`: Specifies the destination directory.
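With five source repos, the rsync line gets repeated once per repo, so a small loop keeps them consistent. A minimal sketch, assuming the clones sit side by side in the current directory as in the clone step; sync_sources is a hypothetical helper, and the two flag changes from the command above are called out in the comments.

```shell
# Sketch: mirror every source repo into its own folder inside the Build repo.
# sync_sources is a hypothetical helper. Two deliberate differences from the command above:
# -v is dropped to keep pipeline logs short, and --delete is added so files removed from a
# source repo are also removed from Build/<repo> instead of lingering between releases.
# Files matching --exclude are also protected from --delete on the destination side.
sync_sources() {
  local build_dir="$1"
  shift
  local repo
  for repo in "$@"; do
    rsync -a --delete --exclude='.*' "$repo/" "$build_dir/$repo" || return 1
  done
}

# Usage, from the directory holding the clones:
# sync_sources Build Website Salesforce Services Samples
```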

Build the Distributables

To execute a build we run the dotnet build command. We do this for the websites and the Lambda functions; the Lambdas will get built again once we hit the AWS CLI for deployment, but this way we either get a clean build or fail here, before anything is deployed.

dotnet build --runtime win-x64
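Because we build both the websites and the Lambda functions, it helps to run the same command over each project folder and stop at the first failure, so a broken project can't slip through to deployment. A rough sketch: build_all is a hypothetical helper, and the folder names in the usage line are assumptions about where the .NET projects live.

```shell
# Sketch: run the same dotnet build in each project folder, stopping at the first failure.
# build_all is a hypothetical helper; pass it the folders that hold your .NET projects.
build_all() {
  local dir
  for dir in "$@"; do
    echo "Building $dir"
    # The subshell keeps the cd local; a non-zero exit from dotnet stops the loop.
    ( cd "$dir" && dotnet build --runtime win-x64 ) || {
      echo "Build failed in $dir" >&2
      return 1
    }
  done
}

# Usage (hypothetical folder names): build_all Build/Website Build/Services
```

Keeping the cd inside a subshell means a failed build leaves the script in the directory it started from, ready for the commit step.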

Commit everything

Okay, the rest is easy from here: we just need to commit everything and push it to our Build repo, so these commands run from inside the Build directory (cd Build). The only trick is that we need to find the current branch name first!

# Get the name of the current branch

current_branch=$(git rev-parse --abbrev-ref HEAD)

# Create a commit with the desired message

commit_message="Release Build $(date +%Y-%m-%d\ %H:%M:%S)"

git add .

git commit -m "$commit_message"

# Push the feature branch to origin and track it as the upstream

git push --set-upstream origin "$current_branch"

Here's what each line does:

- `git rev-parse --abbrev-ref HEAD`: Gets the name of the current branch and assigns it to the variable current_branch.
- `commit_message="Release Build $(date +%Y-%m-%d\ %H:%M:%S)"`: Creates a variable called commit_message holding the commit message, stamped with the current date and time.
- `git add .`: Stages all files in the current directory and all sub-directories, including deletions.
- `git commit -m "$commit_message"`: Commits the staged files using commit_message.
- `git push --set-upstream origin "$current_branch"`: Pushes the feature branch to a branch of the same name on origin and sets origin/$current_branch as its upstream. Pushing straight to master at this point (for example with "$current_branch:master") would leave the pull request in the next step with nothing to merge, and would set the upstream to origin/master rather than origin/$current_branch.
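The same commit step can be wrapped in a function that copes with a re-run where nothing changed: git commit exits non-zero when there is nothing staged, which would fail the pipeline. A minimal sketch under stated assumptions: commit_and_push is a hypothetical helper, it runs inside the Build repo, and the agent already has a git identity configured. On Git 2.22 or later, `git branch --show-current` is an alternative way to read the branch name.

```shell
# Sketch: stage, commit and push the Build repo's feature branch in one step.
# commit_and_push is a hypothetical helper; it assumes it runs inside the Build repo,
# that "origin" is the Build remote from the clone step, and that the agent already
# has a git user.name and user.email configured.
commit_and_push() {
  local branch message
  branch="$(git rev-parse --abbrev-ref HEAD)" || return 1
  message="Release Build $(date '+%Y-%m-%d %H:%M:%S')"

  # --all stages new, modified and deleted files across the whole working tree,
  # whichever sub-directory we happen to be in.
  git add --all || return 1

  # git commit exits non-zero when nothing is staged, which would fail the pipeline,
  # so treat "nothing changed" as success instead.
  if git diff --cached --quiet; then
    echo "Nothing to commit on $branch"
    return 0
  fi

  git commit --quiet -m "$message" || return 1
  # Push to a remote branch of the same name and record it as the upstream.
  git push --quiet --set-upstream origin "$branch"
}

# Usage: (cd Build && commit_and_push)
```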

Create a Pull Request

Now let's create a PR and merge our changes to master; this triggers the Azure DevOps pipeline, which does the heavy lifting of deploying.

# Create a pull request from the feature branch into master

hub pull-request -b master -m "$commit_message"

# Switch to master and merge the pull request locally (hub merge expects the PR URL)

git checkout master

hub merge "$(hub pr list -h "$current_branch" -f "%U")"

# Push the merged master branch to origin

git push origin master

Here's what each command does:

- `hub pull-request -b master -m "$commit_message"`: Creates a pull request from the current (feature) branch into master, using the commit message as the PR title.
- `git checkout master`: Switches to the local master branch, because hub merge merges into whichever branch is checked out.
- `hub pr list -h "$current_branch" -f "%U"`: Gets the URL of the open pull request whose head is the feature branch.
- `hub merge "$(hub pr list -h "$current_branch" -f "%U")"`: Merges that pull request into the local master branch with a merge commit that mentions the PR number and title, similar to GitHub's Merge button.
- `git push origin master`: Pushes the merged master branch to origin.
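hub is GitHub's command-line tool, so it can only create and merge pull requests on GitHub. If your Build repo lives in Azure Repos, as the _git URLs in the clone step suggest, a rough equivalent uses the Azure CLI with its azure-devops extension. This is a swapped-in technique, not the one we run above: create_and_complete_pr is a hypothetical helper, and it assumes the extension is installed, the CLI is logged in, and the organization, project and repository are detected from the git remote (pass --organization, --project and --repository if they aren't).

```shell
# Sketch: the same PR-and-merge step with the Azure CLI (azure-devops extension) instead of hub.
# create_and_complete_pr is a hypothetical helper. Assumes `az extension add --name azure-devops`
# has been run, the CLI is logged in, and org/project/repo are detected from the git remote.
create_and_complete_pr() {
  local source_branch="$1" target_branch="$2" title="$3" pr_id
  # Create the PR and keep only its numeric ID.
  pr_id="$(az repos pr create \
    --source-branch "$source_branch" \
    --target-branch "$target_branch" \
    --title "$title" \
    --query pullRequestId --output tsv)" || return 1
  [ -n "$pr_id" ] || return 1
  # Completing the PR performs the merge on the Azure DevOps server, so no local merge
  # or push is needed. Branch policies (required reviewers, build validation) can block this.
  az repos pr update --id "$pr_id" --status completed --output none
}

# Usage: create_and_complete_pr "$current_branch" master "$commit_message"
```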

Finally

So why didn't we just let the Azure DevOps pipeline do the heavy lifting and code all of this in YAML? The key is in the git push origin master: the only difference between a full-blown production release and a test release is the target branch. To deploy to our test servers we run git push origin test instead, and everything else remains the same.
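Since the target branch is the only thing that changes, it can be made the script's single input. A minimal sketch, assuming the release commit is the checked-out HEAD and that test and master are the branches the deployment pipeline watches; push_release is a hypothetical helper, and pushing HEAD to the named branch (rather than a local branch called test) is our assumption about how you'd wire it.

```shell
# Sketch: one release script, with the target branch as its only input.
# push_release is a hypothetical helper. It assumes the release commit is the checked-out
# HEAD and that "test" and "master" are the branches your deployment pipeline watches.
push_release() {
  local target="$1"
  case "$target" in
    test|master) ;;
    *) echo "usage: push_release <test|master>" >&2; return 1 ;;
  esac
  # HEAD:<target> pushes the current commit to the named branch on origin. Git rejects the
  # push if that branch has commits HEAD doesn't contain, rather than overwriting them.
  git push origin "HEAD:$target"
}

# Usage: push_release test    (or: push_release master)
```

Restricting the target to a known list means a typo fails loudly instead of quietly creating a new branch that no pipeline is watching.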

This approach also means that all our deployment pipeline needs to do is pull the latest version of the already compiled code and deploy it to AWS, Salesforce and Azure.

Okay, so where does that leave us? Hopefully by now you can see that a combination of scripting and some command-line hijinks allows you to manipulate your git repo to mirror whatever strange deployment and branch merge strategy you have defined. Drop me a line and let me know how you go!

Next month, I'm going to put this all together for a Salesforce Copado pipeline that takes the out-of-the-box product to a whole new level. As always, reach out and let us know how we can help at AppGenie.com.au.

This is article 3 of 3 in my series on git.